¶ AI Assistants

You have the ability to integrate AI within your RevCent account through the use of a RevCent AI Assistant. Add an AI integration to RevCent, create a RevCent AI Assistant and take advantage of AI automation.

¶ How It Works

With an AI assistant, you do not communicate directly with the AI. Instead, the RevCent AI Assistant is activated based on trigger settings and runs autonomously in the background, communicating with the AI based on the design of a thread. Depending on the trigger, different options will be available to limit the assistant to only run on certain conditions and filters.

- The AI Assistant is triggered.

- The AI follows the workflow you design in the Thread Builder.

- Orchestration with the AI is performed automatically by RevCent.

- Fully automate tasks within your ecommerce business.

¶ Getting Started

- Create an account with one or more of the available third party AI providers: OpenAI, Gemini, OpenRouter or xAI.

- Add a payment method in your third party account to increase limits.

- Create a third party integration, selecting the third party.

- Enter the required credentials depending on the third party.

- Save your third party credentials and available models will appear.

- Select the third party AI model you wish to use and save your integration.

- Go to the AI Assistants page in RevCent.

- Click the Create AI Assistant button.

¶ Pricing

- AI Model Pricing: Check your third party AI provider's pricing for the model you selected. RevCent is not responsible for charges you incur within your third party AI account. However, RevCent does provide the ability to limit token usage via usage limits.

- RevCent Pricing: RevCent charges a total of $0.02 per minute of AI time consumed. Time consumed is the total amount of time RevCent spends communicating back and forth with the AI model. Time consumed is highly dependent on the design of your assistant thread, specifically the number of steps and AI response time per step.
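As a rough illustration of the RevCent charge, the sketch below multiplies total AI time by the $0.02 per minute rate stated above. The function name and the sample step timings are hypothetical, and whether RevCent prorates by the second or rounds billed time is not specified here, so treat the result as an estimate only.

```javascript
// Rate taken from the Pricing section. Per-second proration with no rounding
// is an assumption, not documented behavior.
const REVCENT_RATE_PER_MINUTE = 0.02;

// stepSeconds: AI time spent on each thread step, in seconds.
function estimateThreadCost(stepSeconds) {
  const totalSeconds = stepSeconds.reduce((sum, s) => sum + s, 0);
  return (totalSeconds / 60) * REVCENT_RATE_PER_MINUTE;
}

// Hypothetical thread: four steps taking 12, 20, 8 and 20 seconds (60 s total).
const perThread = estimateThreadCost([12, 20, 8, 20]);
console.log(`$${perThread.toFixed(4)}`); // $0.0200
```

Third party model charges are separate and are not included in this figure.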

¶ General Settings

Below are the general settings that apply to an AI assistant.

¶ Name

You will want to give the AI Assistant a name that is easy to read and different than other assistants.

¶ Description

The description should briefly describe what the AI Assistant does as if another human is reading it and doesn't know. This is useful when triggering an AI Assistant from another AI Assistant.

¶ AI Integration

Select the AI third party integration you already created in RevCent. This should have been done as part of the Getting Started guide.

¶ AI Instructions

You must provide clear instructions for the AI. The AI needs to know what its purpose is, so it will read the initial instructions you set and act accordingly. The instructions will be passed to the AI as the role: system.

AI instructions should be a broad explanation of the AI Assistant's overall purpose. However, you do not want to give explicit instructions on what to do; that is what the AI Thread steps are for.

Examples:

- “You are an AI Assistant that analyzes declined sales.”

- “You will be reading customer notes and generating an overall sentiment based on the note contents.”
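To illustrate where the instructions sit, the sketch below builds a chat-style message list with the AI instructions in the system role, as described above. The exact payload RevCent sends to the third party is not documented here; the `buildMessages` helper and the use of the user role for step messages are assumptions made for illustration.

```javascript
// Hypothetical helper: the instructions become the system message, followed by
// thread step messages. Using the "user" role for steps is an assumption.
function buildMessages(instructions, stepMessages) {
  return [
    { role: 'system', content: instructions },
    ...stepMessages.map((content) => ({ role: 'user', content })),
  ];
}

const messages = buildMessages(
  'You are an AI Assistant that analyzes declined sales.',
  ['Review the sale and create a note indicating what was purchased.']
);
console.log(messages[0].role); // system
```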

¶ Trigger Settings

The trigger is what activates the assistant to run autonomously. Depending on the trigger, additional settings may appear.

¶ Trigger

There are multiple triggers available:

- Event: The AI Assistant is triggered when a specific event occurs within your RevCent account.

- On Demand: The AI Assistant is triggered either via API, Web App or as a System Tool within another AI Assistant's thread.

- Schedule: The AI Assistant is triggered on a fixed schedule.

¶ Trigger: Event

The AI Assistant is triggered when a specific Event occurs. You can apply filters so that only specific events trigger the AI Assistant. You can also enable the assistant to be triggered within the web app by enabling Web Access.

¶ Event

Select the specific event notation and the AI Assistant will be automatically triggered when said event occurs.

¶ Delay

The period of time to wait after the event occurred until the assistant thread officially starts. Default minimum is 1 minute, maximum is 30 days.

¶ Filters

You have the option to only activate the assistant based on attributes of the item specific to the trigger. Before triggering the AI Assistant from an event, RevCent will check any filters to see if the item matches said filters. This enables you to only trigger the AI Assistant for items specific to a campaign, shop, metadata, etc.

¶ Filter Function

In conjunction with base filters, you can also select a function to further filter which item will trigger the assistant.

The function will be provided with the item data via event.data.item_details. Please view example events to view what the function will receive dependent on the item type that triggered the event.

Important: The function must return a single term: either 'pass' or 'fail'. If 'pass', the assistant will run. If 'fail', the assistant will not run. Default is 'pass'.

Pass

Example function return for a pass:

callback(null, 'pass');

Fail

Example function return for a fail:

callback(null, 'fail');
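Putting the two returns together, a filter function might look like the following minimal sketch. It assumes the function receives `event` and `callback`, which matches the `callback(null, ...)` examples above but is otherwise not confirmed here. It also relies on a hypothetical `amount` field on the item; view an example event for the fields your item type actually provides.

```javascript
// Hypothetical threshold, chosen only for this example.
const MIN_AMOUNT = 50;

function filterItem(event, callback) {
  // Item data arrives at event.data.item_details.
  const item = (event && event.data && event.data.item_details) || {};

  // "amount" is an assumed field name, not a documented one.
  const amount = Number(item.amount);

  // Return exactly one term: 'pass' runs the assistant, 'fail' skips it.
  const verdict = Number.isFinite(amount) && amount >= MIN_AMOUNT ? 'pass' : 'fail';
  callback(null, verdict);
}

// Local usage with a made-up event.
filterItem({ data: { item_details: { amount: 75 } } }, (err, result) => {
  console.log(result); // pass
});
```

Missing or non-numeric values fall through to 'fail' in this sketch, so the assistant only runs when the condition is clearly met.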

¶ Max Runs Per Item

The maximum number of times the specific AI Assistant can be triggered for the same item. Recommended setting: 1.

Note: Max runs is per assistant and item. An item can have more than one assistant triggering it.

Important: Depending on the trigger and what the AI is doing, the same item can be processed multiple times. This is why the max run setting exists and should almost always be set to 1. For example: If you are triggering the assistant to run on a declined sale, and the AI is going to reprocess the sale, if the AI's attempt to reprocess results in a decline, the AI will be triggered once again for the same sale. This is because the trigger would fire once for the original declined sale and again for the AI's declined sale, which you could avoid by setting max runs to 1. Having this setting greater than 1 can cause multiple attempts when you only intend to attempt once.

¶ Min Run Interval

Only applies if Max Runs Per Item is greater than 1.

If you do intend to run the AI on the same item more than once, you can put a delay between assistant runs for the item. The Min Run Interval is the period of time that must elapse between runs for the same item. For example, suppose you are reprocessing a sale using the AI and, if the attempt is declined, you want to reprocess it once more 1 day later. You would set Max Runs Per Item to 2 and Min Run Interval to 1 day. This ensures that after the first decline by the AI, the AI will not run on the same item again until at least 1 day has passed.
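The interaction between Max Runs Per Item and Min Run Interval can be pictured as a simple gate. The sketch below is not RevCent's implementation; `canRunForItem` and its parameters are hypothetical names that only mirror the two settings described above, and it does not model whether a blocked trigger is skipped or deferred.

```javascript
const DAY_MS = 24 * 60 * 60 * 1000;

// previousRuns: timestamps (ms) of earlier runs of this assistant for this item.
function canRunForItem(previousRuns, nowMs, maxRunsPerItem, minRunIntervalMs) {
  if (previousRuns.length >= maxRunsPerItem) return false; // run budget used up
  if (previousRuns.length === 0) return true; // the interval only applies between runs
  const lastRun = Math.max(...previousRuns);
  return nowMs - lastRun >= minRunIntervalMs;
}

// Example from the text: Max Runs Per Item = 2, Min Run Interval = 1 day.
const firstRun = Date.UTC(2024, 0, 1, 12, 0, 0);
console.log(canRunForItem([firstRun], firstRun + 60 * 60 * 1000, 2, DAY_MS)); // false
console.log(canRunForItem([firstRun], firstRun + DAY_MS, 2, DAY_MS)); // true
console.log(canRunForItem([firstRun, firstRun + DAY_MS], firstRun + 3 * DAY_MS, 2, DAY_MS)); // false
```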

¶ Trigger: On Demand

The AI Assistant is activated either via API, within the web app or another AI Assistant thread.

¶ API

The AI Assistant trigger API method is available, allowing you to trigger an AI Assistant remotely. View the docs.

¶ Web

The assistant button will be visible when viewing item details within the web app for the item type(s) you select. Web access must be enabled. Read about Web Settings.

¶ Another Assistant

You can trigger an AI Assistant from within the thread of another AI Assistant.

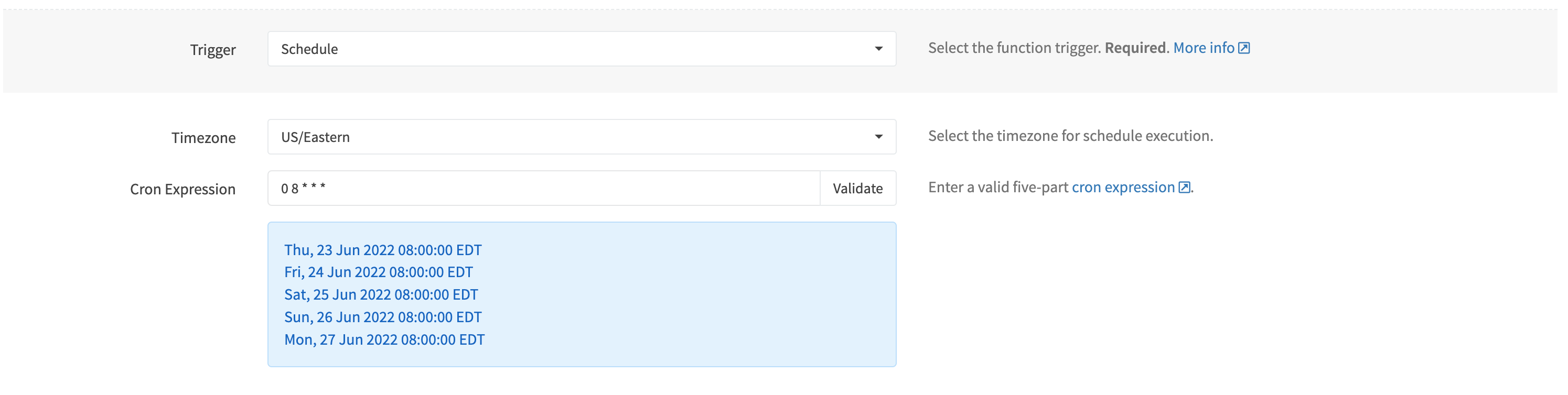

¶ Trigger: Schedule

The AI Assistant is activated on a fixed schedule using a cron expression. You must select a timezone and enter a valid cron expression.

¶ Timezone

Select a timezone for the cron expression. The timezone will determine the time when the AI Assistant is triggered.

¶ Cron Expression

The cron expression is the most important part of a Schedule trigger. You must enter a standard five-part cron expression:

- Minutes: 0-59

- Hours: 0-23

- Day of Month: 1-31

- Months: 0-11 (Jan-Dec)

- Day of Week: 0-6 (Sun-Sat)

We recommend using the validate feature before attempting to save the AI Assistant. The next 5 run dates will be displayed when validating.

¶ Important things to note

- Cron expression intervals cannot be less than 1 hour apart, i.e. you cannot schedule an AI Assistant to run every 10 minutes, every 30 minutes, etc.

- Scheduled functions are checked every 10 minutes, so exact minute execution can have up to a 10 minute variance. If exact timing is important, we recommend a cron expression whose minute is a multiple of 10, i.e. one that runs at 04:00:00, 11:10:00, 16:20:00, etc.

- Only standard five-part cron expressions are supported, e.g. 0 8 * * *. The @ syntax is not supported.
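The rules above can be approximated with a small pre-check before pasting an expression into RevCent. This is a minimal sketch, not RevCent's validator: `checkCron` is a hypothetical helper, it only understands `*` and plain integers (no lists, ranges or steps), and it uses the field ranges exactly as listed in this section. The validate feature in RevCent remains the authority.

```javascript
// Field ranges as listed in this section (months are 0-11 here).
const FIELDS = [
  { name: 'minute', min: 0, max: 59 },
  { name: 'hour', min: 0, max: 23 },
  { name: 'dayOfMonth', min: 1, max: 31 },
  { name: 'month', min: 0, max: 11 },
  { name: 'dayOfWeek', min: 0, max: 6 },
];

function checkCron(expression) {
  const text = expression.trim();
  if (text.startsWith('@')) {
    return { ok: false, problems: ['the @ syntax is not supported'] };
  }
  const parts = text.split(/\s+/);
  if (parts.length !== 5) {
    return { ok: false, problems: ['expected exactly five parts'] };
  }
  const problems = [];
  parts.forEach((part, i) => {
    const { name, min, max } = FIELDS[i];
    if (part === '*') return;
    // Anything other than "*" or a plain integer is outside this sketch.
    if (!/^\d+$/.test(part)) {
      problems.push(`${name}: "${part}" is not handled by this sketch`);
      return;
    }
    const value = Number(part);
    if (value < min || value > max) {
      problems.push(`${name}: ${value} is outside ${min}-${max}`);
    }
  });
  // A wildcard minute fires every minute, which is more often than hourly.
  if (parts[0] === '*') {
    problems.push('minute: "*" would run more often than once per hour');
  }
  return { ok: problems.length === 0, problems };
}

console.log(checkCron('0 8 * * *').ok); // true
console.log(checkCron('@daily').problems[0]); // the @ syntax is not supported
```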

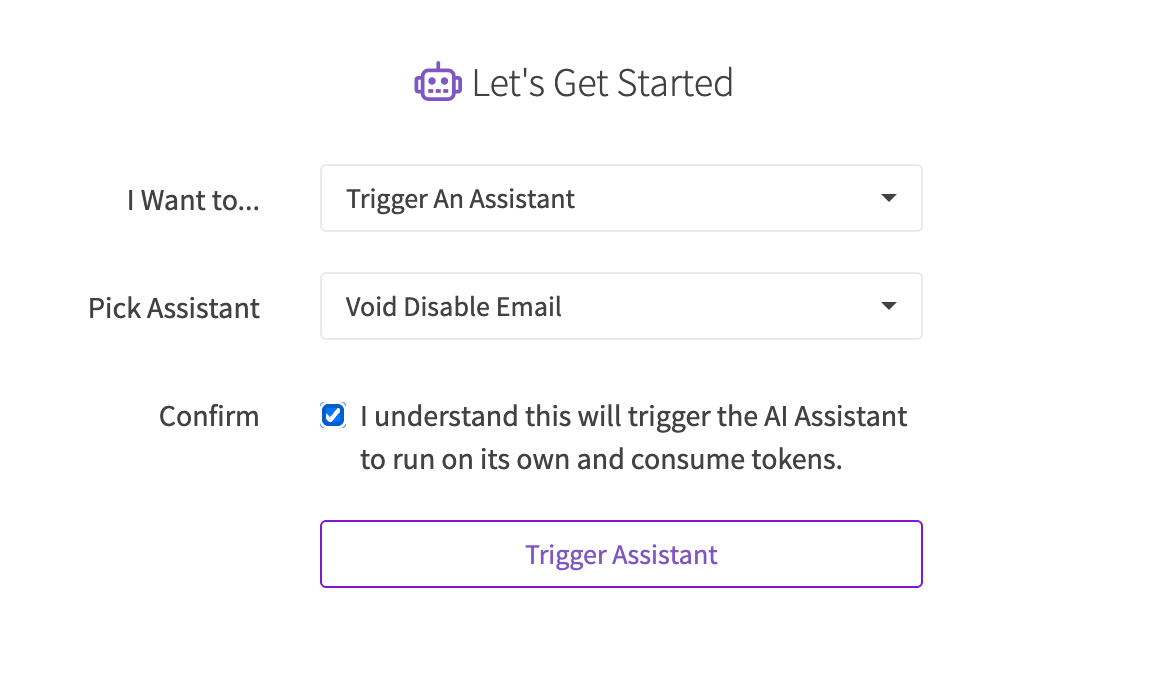

¶ Web Settings

An AI Assistant can be triggered by a user within the RevCent web app. This is useful if the AI Assistant performs a specific task such as sending an email or issuing a refund. You choose the specific item types and user types which can trigger the AI Assistant within the web app.

¶ Web Options

Below are the options available for web settings within an AI Assistant.

¶ Web Access

Enable or disable web access, giving users the ability to trigger the AI Assistant when logged into RevCent.

¶ Item Types

Select one or more item type(s) which will have the assistant button visible when viewing item details within the web app. You must select at least one.

¶ User Types

Select one or more user type(s) which will have the assistant button visible when viewing item details within the web app. You must select at least one.

¶ Max Tokens Per User

Limit the total number of tokens a web user can consume in a 24 hour period. 0 = no limit.

¶ Web App Trigger

When Web Access is enabled, the Item types selected will have an AI Assistant button present on the item details page. This gives web app users the ability to click the button and trigger an AI Assistant specific to the item they are viewing.

¶ Trigger Button

The AI Assistant button will appear on the details page of the item types you selected. If the button is clicked by a user the trigger modal will appear.

¶ Trigger Modal

The trigger modal will ask the user which AI Assistant they wish to trigger. Once triggered, the AI Assistant selected will run autonomously for the specific item the user is viewing.

¶ Thread Builder

Each time an assistant is triggered, it generates a unique thread within RevCent. The thread builder is where you design how the RevCent AI Assistant thread will run, including steps, tools and branching.

¶ Overview

The thread builder consists of nodes, which you connect in the order you want processed when the thread runs. Connect nodes to one another as a sequence of events in which the assistant interacts with the AI. Additional nodes are available to either wait a period of time or end the thread entirely.

¶ Start Thread

The start thread node only occurs once when building a thread. It is the starting point for the thread, i.e. where the thread begins to run after the trigger has occurred.

A start thread node will begin with a message to the AI. The message to the AI is pre-determined based on the event and item type that triggered the assistant. For example, if the assistant is triggered by a new sale, the start thread node will first ask the AI to retrieve the details on the specific sale. The AI will retrieve the sale details and keep the sale details in the thread message history. This gives the AI the initial context and information it needs for the remainder of the thread, i.e. it can reference the sale in future thread messages without needing to retrieve the sale details again.

¶ Thread Step

The thread step node contains the message the AI will read.

¶ Step Message

The step message is sent to the AI instructing the AI what to do at the specific step in the thread. Write the message the same as you would for a human, being very clear and precise. The AI will read the message and determine what, if anything, to do based on your message.

You have the ability to insert an AI Prompt as a step message by selecting the prompt.

¶ Step Examples

Below are some example thread steps, showing what is possible by simply messaging the AI as if it were a human.

Step Example 1

- Step Message: “Review the sale and create a note indicating what was purchased. Also, find all functions and run the Analysis Function with all arguments.”

- Step Result: The AI will read the message and create the note with text it generates. The AI will also find all Functions, get the ID for the Analysis Function, which it will run based on the message telling it to do so. It will also compile the custom arguments set within the Analysis Function.

Step Example 2

- Step Message: “Read the existing customer notes. If the notes indicate the customer has called more than 3 times about a problem with their order do the following things: 1. void the sale. 2. Create a note indicating that the sale was voided due to the notes indicating the customer has called too many times. 3. Find the Instant Full Refund email template and send an SMTP message using the template with a customized apology for customer service not fulfilling their request.”

- Step Result: The AI will read the message, then review the existing customer notes. If the AI concludes the customer has complained too many times, it will void the sale with a note indicating why it was voided, and the AI will find the Instant Full Refund email template and send an SMTP message with a customized apology.

¶ Thread Branch

The thread branch node gives you the ability to have the AI choose an output path to take. This allows you to split a thread based on one or more conditions. You write the message for each output path as an individual condition. When the thread runs and reaches a thread branch node, RevCent will first format your output messages into a single conditional statement and send it to the AI. The AI will know it is a conditional statement and that it must choose only one output. The choice the AI makes determines the path the thread will take.

¶ Outputs

There are four possible outputs the AI can decide to take, three of which you set the condition for using a message. The fourth output is reserved as a catch all in case the AI does not choose from one of the outputs you set. You do not need to use all three outputs available to you, but you must use at least one.

¶ Output Message

Each output message you write is an individual condition, where if true, the AI should choose the specific output.

¶ Output Example

Here is a basic example of splitting a thread using a branch node.

Let's say you want to split the thread based on what was purchased in a sale. If the customer purchased a USB you want to branch on one path, if a laptop a second path, and if neither a USB nor a laptop then a third path.

You create the branch node with a different message for each output 1, 2 and 3:

- Output 1 message: “the customer purchased a USB”

- Output 2 message: “the customer purchased a laptop”

- Output 3 message: “the customer did not purchase a laptop or a USB”

RevCent will format your output messages (conditions) into a final statement meant for the AI. The AI will read the conditional statement RevCent created and determine the output result. For example, if a new sale is placed where the customer purchased a Laptop X400, the AI would determine that output 2 is the output result, thus splitting the thread at the branch node's output 2.
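The actual wording RevCent sends to the AI is not shown in this document. Purely to make the idea concrete, the sketch below combines up to three output messages into one conditional statement and falls back to the fourth, catch all output when the reply is not a valid choice. Both function names and the statement wording are hypothetical.

```javascript
// The fourth output is reserved as the catch all.
const CATCH_ALL_OUTPUT = 4;

// conditions: one to three output messages written by you.
function buildBranchStatement(conditions) {
  if (conditions.length < 1 || conditions.length > 3) {
    throw new Error('Provide between one and three output messages.');
  }
  const lines = conditions.map((c, i) => `If ${c}, choose output ${i + 1}.`);
  lines.push(`Otherwise, choose output ${CATCH_ALL_OUTPUT}.`);
  lines.push('Reply with the output number only.');
  return lines.join('\n');
}

// Map the AI's reply to an output, using the catch all for anything invalid.
function resolveOutput(aiReply, conditionCount) {
  const choice = Number.parseInt(String(aiReply).trim(), 10);
  const valid = Number.isInteger(choice) && choice >= 1 && choice <= conditionCount;
  return valid ? choice : CATCH_ALL_OUTPUT;
}

const statement = buildBranchStatement([
  'the customer purchased a USB',
  'the customer purchased a laptop',
  'the customer did not purchase a laptop or a USB',
]);
console.log(statement);
console.log(resolveOutput('2', 3)); // 2
console.log(resolveOutput('not sure', 3)); // 4
```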

¶ Time Delay

The time delay node allows you to pause the thread for up to 3 days, giving you time to wait for specific updates that may occur related to the item that triggered the event. Minimum is 1 minute, maximum is 3 days.

Note: Threads are stored for a maximum of 7 days since created regardless of time delays. Once a thread is 7 days old it is permanently deleted.
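Because the 7 day thread lifetime applies regardless of delays, it can help to add up the delays along a path while designing a thread. The following sketch does that arithmetic; `checkDelays` is a hypothetical helper, and it ignores the time the AI itself spends on each step, which also counts toward the thread's age.

```javascript
const DAY = 24 * 60; // minutes in a day
const MIN_DELAY = 1; // 1 minute
const MAX_DELAY = 3 * DAY; // 3 days per time delay node
const THREAD_LIFETIME = 7 * DAY; // threads are deleted at 7 days old

// delaysInMinutes: the time delay nodes along one path, in order.
function checkDelays(delaysInMinutes) {
  const problems = [];
  delaysInMinutes.forEach((delay, i) => {
    if (delay < MIN_DELAY || delay > MAX_DELAY) {
      problems.push(`delay ${i + 1} must be between 1 minute and 3 days`);
    }
  });
  const total = delaysInMinutes.reduce((sum, d) => sum + d, 0);
  if (total >= THREAD_LIFETIME) {
    problems.push('total delay reaches the 7 day thread lifetime');
  }
  return problems;
}

console.log(checkDelays([DAY, 2 * DAY])); // []
console.log(checkDelays([3 * DAY, 3 * DAY, 3 * DAY]).length); // 1
```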

¶ End Thread

The end thread node allows you to end the thread. It may be useful for explicitly marking where you do not wish to continue the thread. Any time a thread has no further node to continue to, it will end on its own.

¶ Usage Limits

Important: Limit the amount of tokens consumed by the AI Assistant to minimize costs. It is highly recommended that you set at least one of these limits.

- Max Tokens Per Day: Limit the total number of tokens that can be consumed by the individual AI Assistant within a 24 hour period. Provide a value in order to limit your daily cost for this assistant. Recommended value is specific to your budget and third party model pricing, i.e. model price per token * daily token limit. 0 = no limit.

- Max Tokens Per Thread: Limit each thread to a total max tokens. A thread is a conversation specific to an item or chat box interaction. Provide a value in order to limit a single thread from consuming too many tokens. Recommended value is 100000 for most use cases. 0 = no limit.
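The daily limit arithmetic mentioned above (model price per token * daily token limit) can be turned around to derive a limit from a budget. The sketch below assumes a single blended price per one million tokens; real providers often price input and output tokens differently, and the budget and price used here are made-up numbers.

```javascript
// Hypothetical helper: how many tokens fit into a daily budget at a given
// price per one million tokens. Both inputs are in USD.
function maxTokensPerDay(dailyBudgetUsd, pricePerMillionTokensUsd) {
  if (dailyBudgetUsd <= 0 || pricePerMillionTokensUsd <= 0) {
    throw new Error('Budget and price must be positive.');
  }
  return Math.floor((dailyBudgetUsd / pricePerMillionTokensUsd) * 1e6);
}

// Made-up example: $5 per day at $2.50 per million tokens.
console.log(maxTokensPerDay(5, 2.5)); // 2000000
```

The result is the value you might enter for Max Tokens Per Day. Remember that entering 0 means no limit, so it should not be used to express a zero budget.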

¶ AI Threads

When an AI Assistant is triggered, an AI Thread gets created. Each AI Thread is a specific run of an AI Assistant, determined by the thread builder design.

View all AI Threads by clicking AI > Assistants > Threads in the sidebar or go to https://revcent.com/user/ai-threads

¶ Thread Details

When viewing all AI Threads, or an item details page with AI Threads attached, click the AI Thread ID. The AI Thread details page contains all of the messages between the AI Assistant and the AI. It also includes details on timing, tokens and more. Easily view past AI interactions and fine tune your AI Assistant thread builder to fit your needs.

¶ AI Memos

An AI Assistant can create an AI Memo when you instruct the AI to create one within a thread step.

¶ View AI Memos

AI Memos are meant as an alert or instant notification. As soon as an AI Memo is created it will be available in the web app.

- New AI Memos will have a live instant notice show in the top right, similar to when new sales are created.

- Unread AI Memo alerts will appear in the left side navigation, similar to other alerts.

- View all AI Memos by clicking AI > Memos in the sidebar or go to https://revcent.com/user/ai-memos

¶ Why AI Memos?

AI Memos can be extremely useful by having the AI analyze your account activity for anything specific you wish to know about. Think of an AI Assistant as an individual viewing events as they happen, and anything important you specify will cause the AI Assistant to alert you via an AI Memo.

Have the AI Assistant create a memo when…

- A gateway is down: Tell the AI to analyze a failed payment, specifically the gateway's decline response.

- A customer threatens a chargeback: Tell the AI to analyze a new customer note and determine if the customer threatened to file a chargeback.

- A renewal failed due to an expired card: Tell the AI to determine if the decline reason was due to an expired card, and if so create a memo.

- High percentage of declines: Have the AI run a transaction query and analyze the results. If the values are above a certain threshold, create a memo.

- Let your imagination run wild.

¶ Extend AI Memos

When an AI Memo is created it spawns an AI Memo Created account event. You can use this event to trigger other features in RevCent.

You can trigger:

- RevCent Function

- Email Template

- Another AI Assistant

¶ Developers

When implementing AI in functions or email templates, you will want to access the AI details. Any custom arguments will be contained within the custom_arguments object.

¶ Custom Arguments Object

The most important information contained is the custom_arguments object. The custom_arguments object will contain the argument name and AI output for each custom argument you added within either a Function or Email Template.

{
  "custom_arguments": {
    "customer_card_id": "XKob65Bn8qfqmWggp99B",
    "customer_card_type": "VISA"
  }
}

¶ Function Event Data

When the AI Assistant triggers a Function, the function's event data will contain the item_details of the specific item being processed.

Access any custom arguments via the event.data.custom_arguments object.

{
  "event": {
    "data": {
      "item_details": {
        "id": "Q4nGRNpLgpS92QqGLwlX",
        "first_name": "George",
        "last_name": "Washington"
      },
      "custom_arguments": {
        ...
      }
    }
  }
}
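As a minimal sketch of reading this data inside a function, the example below pulls values from event.data.item_details and event.data.custom_arguments. The `(event, callback)` shape follows the filter function examples earlier in this document. The function name, the summary object and what RevCent does with the value passed to the callback are assumptions.

```javascript
// Hypothetical function body wrapped in a named function for local testing.
function describeAiEvent(event, callback) {
  const data = (event && event.data) || {};
  const item = data.item_details || {}; // the item being processed
  const args = data.custom_arguments || {}; // AI output per custom argument

  const summary = {
    itemId: item.id,
    customerName: `${item.first_name} ${item.last_name}`,
    cardType: args.customer_card_type,
  };
  callback(null, summary);
}

// Local usage with the sample values shown in this section.
describeAiEvent(
  {
    data: {
      item_details: { id: 'Q4nGRNpLgpS92QqGLwlX', first_name: 'George', last_name: 'Washington' },
      custom_arguments: { customer_card_id: 'XKob65Bn8qfqmWggp99B', customer_card_type: 'VISA' },
    },
  },
  (err, summary) => console.log(summary.customerName, summary.cardType) // George Washington VISA
);
```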

¶ Email Template Input Data

When the AI Assistant sends an SMTP message, the email template's input data will contain the details of the item.

Access any custom arguments via the custom_arguments object.

{
  "id": "Q4nGRNpLgpS92QqGLwlX",
  "first_name": "George",
  "last_name": "Washington",
  "custom_arguments": {
    ...
  }
}
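Note that here custom_arguments sits alongside the item fields rather than under event.data. How the email template engine exposes this data is not covered in this section, so the sketch below uses plain JavaScript only to show the shape; `buildGreeting` is a hypothetical helper, and `customer_card_type` is borrowed from the earlier custom arguments example.

```javascript
// Hypothetical helper: builds one line of text from email template input data.
function buildGreeting(input) {
  const { first_name: firstName, custom_arguments: args = {} } = input;
  // Fall back to a generic word when the AI did not supply the argument.
  const cardType = args.customer_card_type || 'payment';
  return `Hello ${firstName}, we have a question about your ${cardType} card.`;
}

console.log(
  buildGreeting({
    id: 'Q4nGRNpLgpS92QqGLwlX',
    first_name: 'George',
    last_name: 'Washington',
    custom_arguments: { customer_card_type: 'VISA' },
  })
); // Hello George, we have a question about your VISA card.
```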